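Learning Phrase Representations Using an RNN Encoder-Decoder for Machine Translation

This post implements machine translation with a single-layer RNN (paper link).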

2.1 Introduction

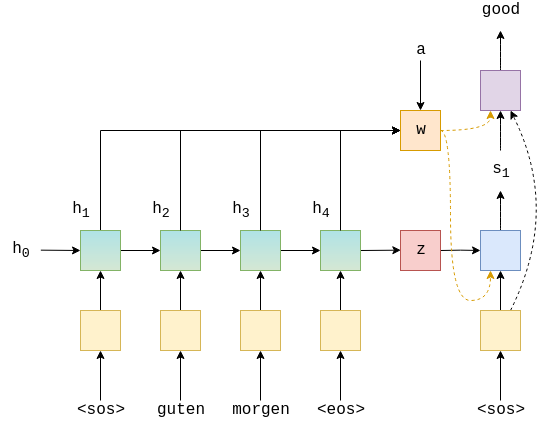

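The previous project used a general seq2seq model built from two-layer LSTMs.

The drawback of that model is that the decoder's hidden state is overloaded: while decoding, the hidden state has to carry information about the entire source sequence.

In addition, this time we use a GRU instead of an LSTM.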

2.2 Preparing the data

This part is largely the same as last time.

```python
# Note: Field, BucketIterator and Multi30k.splits belong to the legacy
# torchtext API (torchtext releases before 0.9).
import torch
import torch.nn as nn
import torch.optim as optim

from torchtext.datasets import Multi30k
from torchtext.data import Field, BucketIterator

import spacy
import numpy as np

import random
import math
import time
```

Set the random seeds.

```python
SEED = 1234

random.seed(SEED)
np.random.seed(SEED)
torch.manual_seed(SEED)
torch.cuda.manual_seed(SEED)
torch.backends.cudnn.deterministic = True
```

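Load spaCy's German and English models.

```python
# German model for the source side, English model for the target side
spacy_de = spacy.load("de_core_news_sm")
spacy_en = spacy.load("en_core_web_sm")
```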

Previously we reversed the German sentences; this time that is not needed.

```python
def tokenize_de(text):
    """
    Tokenizes German text from a string into a list of strings
    """
    return [tok.text for tok in spacy_de.tokenizer(text)]

def tokenize_en(text):
    """
    Tokenizes English text from a string into a list of strings
    """
    return [tok.text for tok in spacy_en.tokenizer(text)]
```

Build the fields.

```python
SRC = Field(tokenize = tokenize_de,
            init_token = '<sos>',
            eos_token = '<eos>',
            lower = True)

TRG = Field(tokenize = tokenize_en,
            init_token = '<sos>',
            eos_token = '<eos>',
            lower = True)
```

Load the data.

```python
train_data, valid_data, test_data = Multi30k.splits(exts = ('.de', '.en'),
                                                    fields = (SRC, TRG))
```

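Build the vocabularies.

```python
# min_freq = 2: tokens that appear only once in the training set map to <unk>
SRC.build_vocab(train_data, min_freq = 2)
TRG.build_vocab(train_data, min_freq = 2)
```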
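To make the effect of `min_freq = 2` concrete, here is a minimal pure-Python sketch of frequency-based vocabulary building. It is an illustration only and not torchtext's implementation; the helper `build_vocab`, the `lookup` function, the ordering of the special tokens and the toy corpus are all hypothetical.

```python
from collections import Counter

def build_vocab(tokenized_sentences, min_freq=2,
                specials=("<unk>", "<pad>", "<sos>", "<eos>")):
    """Hypothetical helper: index only tokens seen at least `min_freq` times."""
    counts = Counter(tok for sent in tokenized_sentences for tok in sent)
    itos = list(specials) + sorted(t for t, c in counts.items() if c >= min_freq)
    return {tok: i for i, tok in enumerate(itos)}

# toy corpus, made up for illustration
toy_corpus = [["a", "dog", "runs"], ["a", "cat", "runs"], ["a", "bird", "sings"]]
stoi = build_vocab(toy_corpus, min_freq=2)

def lookup(token):
    # tokens below the frequency threshold fall back to <unk>
    return stoi.get(token, stoi["<unk>"])

print(sorted(stoi, key=stoi.get))       # special tokens first, then "a" and "runs"
print(lookup("dog") == stoi["<unk>"])   # "dog" occurs once, so it maps to <unk>
```

Only `a` and `runs` occur at least twice in the toy corpus, so every other word is looked up as `<unk>`.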

Select the device and build the iterators.

```python
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

BATCH_SIZE = 128

train_iterator, valid_iterator, test_iterator = BucketIterator.splits(
    (train_data, valid_data, test_data),
    batch_size = BATCH_SIZE,
    device = device)
```

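2.3 Building the seq2seq model

2.3.1 Encoder

The encoder is similar to the one in the previous project, with the multi-layer LSTM replaced by a single-layer GRU. A GRU only takes and returns a hidden state; it has no cell state like the LSTM.

Written as a recurrence, $h_t = \text{GRU}(e(x_t), h_{t-1})$, where $e(x_t)$ is the embedding of the source token $x_t$, the GRU looks identical to a plain RNN. Inside the GRU, however, several gating mechanisms control the flow of information into and out of the hidden state (similar to an LSTM). See this article for details.

The rest is similar to the previous model: the encoder steps through the sequence, computing the hidden states, and returns the context vector $z=h_T$.

This is identical to the encoder of the general seq2seq model; the only differences are inside the GRU (shown in green).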

```python
class Encoder(nn.Module):
    def __init__(self, input_dim, emb_dim, hid_dim, dropout):
        super().__init__()

        self.hid_dim = hid_dim

        self.embedding = nn.Embedding(input_dim, emb_dim)

        # single-layer GRU: only a hidden state, no cell state
        self.rnn = nn.GRU(emb_dim, hid_dim)

        self.dropout = nn.Dropout(dropout)

    def forward(self, src):
        # src = [src len, batch size]
        embedded = self.dropout(self.embedding(src))
        # embedded = [src len, batch size, emb dim]

        outputs, hidden = self.rnn(embedded)
        # outputs = [src len, batch size, hid dim]
        # hidden = [1, batch size, hid dim]

        # the final hidden state is the context vector z
        return hidden
```
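As a quick sanity check on $z=h_T$, the following minimal sketch shows that for a single-layer, unidirectional `nn.GRU` the returned `hidden` is simply the last time step of `outputs`. The tensor sizes are arbitrary toy values chosen for illustration, not the hyperparameters used later in this post.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# toy sizes chosen only for illustration
src_len, batch_size, emb_dim, hid_dim = 5, 3, 8, 16

rnn = nn.GRU(emb_dim, hid_dim)  # single layer, unidirectional
embedded = torch.randn(src_len, batch_size, emb_dim)

outputs, hidden = rnn(embedded)

print(outputs.shape)  # torch.Size([5, 3, 16])
print(hidden.shape)   # torch.Size([1, 3, 16])

# the context vector z = h_T is the last row of `outputs`
same = torch.allclose(outputs[-1], hidden[0])
print(same)
```

This is why the encoder can discard `outputs` and return only `hidden`.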

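2.3.2 Decoder

The decoder differs considerably from last time; this model relieves some of the information compression.

The GRU takes the target token $y_t$, the hidden state from the previous time step $s_{t-1}$, and the context vector $z$.

$z$ has no time subscript, which means the same context vector returned by the encoder is reused at every decoder time step. The prediction $\hat{y}_{t+1}$ is produced by a fully connected layer applied to the current token $y_t$, the hidden state $s_t$, and the context vector $z$.

The initial hidden state $s_0$ is still the context vector $z$, so when generating the first token we are effectively feeding two identical context vectors into the GRU.

The GRU receives the concatenation of $y_t$ and $z$, so its input dimension is emb_dim + hid_dim.

The fully connected layer receives the concatenation of $y_t$, $s_t$, and $z$, so its input dimension is emb_dim + hid_dim * 2.

The forward function now takes an additional context argument. In the forward pass, we concatenate $y_t$ and $z$ into emb_con and feed it to the GRU. We then concatenate $y_t$, $s_t$, and $z$ and pass the result through the fully connected layer to obtain the prediction $\hat{y}_{t+1}$.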
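The two decoder steps described above can be written compactly as follows. The notation is assumed here for illustration: $d(y_t)$ is the embedded target token, $[\cdot\,;\cdot]$ denotes concatenation, and $f$ is the fully connected layer.

$$
\begin{aligned}
s_t &= \text{GRU}\big([d(y_t);\, z],\; s_{t-1}\big), \qquad s_0 = z \\
\hat{y}_{t+1} &= f\big([d(y_t);\, s_t;\, z]\big)
\end{aligned}
$$

The first line explains the GRU input size emb_dim + hid_dim, and the second the fully connected input size emb_dim + hid_dim * 2.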

```python
class Decoder(nn.Module):
    def __init__(self, output_dim, emb_dim, hid_dim, dropout):
        super().__init__()

        self.hid_dim = hid_dim
        self.output_dim = output_dim

        self.embedding = nn.Embedding(output_dim, emb_dim)

        # GRU input: embedded token concatenated with the context vector
        self.rnn = nn.GRU(emb_dim + hid_dim, hid_dim)

        # linear input: [embedded token; hidden state; context vector]
        self.fc_out = nn.Linear(emb_dim + hid_dim * 2, output_dim)

        self.dropout = nn.Dropout(dropout)

    def forward(self, input, hidden, context):
        # input = [batch size]
        # hidden = [1, batch size, hid dim]
        # context = [1, batch size, hid dim]

        input = input.unsqueeze(0)
        # input = [1, batch size]

        embedded = self.dropout(self.embedding(input))
        # embedded = [1, batch size, emb dim]

        emb_con = torch.cat((embedded, context), dim = 2)
        # emb_con = [1, batch size, emb dim + hid dim]

        output, hidden = self.rnn(emb_con, hidden)
        # hidden = [1, batch size, hid dim]

        output = torch.cat((embedded.squeeze(0), hidden.squeeze(0), context.squeeze(0)),
                           dim = 1)
        # output = [batch size, emb dim + hid dim * 2]

        prediction = self.fc_out(output)
        # prediction = [batch size, output dim]

        return prediction, hidden
```
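To see where the two input sizes come from, the sketch below traces the tensor shapes through a single decoder step using bare layers. The sizes are arbitrary toy values, and dropout and the embedding lookup are left out for brevity, so this is a shape walkthrough under those assumptions rather than the decoder itself.

```python
import torch
import torch.nn as nn

# toy sizes chosen only for illustration
batch_size, emb_dim, hid_dim, output_dim = 4, 8, 16, 50

embedded = torch.randn(1, batch_size, emb_dim)  # stands in for the embedded y_t
context = torch.randn(1, batch_size, hid_dim)   # z
hidden = context.clone()                        # s_0 = z

rnn = nn.GRU(emb_dim + hid_dim, hid_dim)
fc_out = nn.Linear(emb_dim + hid_dim * 2, output_dim)

# GRU input: token embedding and context, joined on the feature axis
emb_con = torch.cat((embedded, context), dim=2)
_, hidden = rnn(emb_con, hidden)

# linear input: token embedding, new hidden state and context
fc_in = torch.cat((embedded.squeeze(0), hidden.squeeze(0), context.squeeze(0)), dim=1)
prediction = fc_out(fc_in)

print(emb_con.shape)     # torch.Size([1, 4, 24])
print(fc_in.shape)       # torch.Size([4, 40])
print(prediction.shape)  # torch.Size([4, 50])
```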

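2.3.3 seq2seq

The model runs through the following steps:

- An output tensor is created to store all of the predictions $\hat{y}$.
- The source sentence $X$ is passed into the encoder to obtain the context vector.
- The initial decoder hidden state is set to the context vector, i.e. $s_0=z=h_T$.
- A batch of `<sos>` tokens is used as the first input.
- At each decoder time step, the decoder receives the current input token $y_t$, the previous hidden state $s_{t-1}$, and the context vector $z$, and returns the prediction $\hat{y}_{t+1}$ and the new hidden state $s_t$.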
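The training code instantiates `Seq2Seq(enc, dec, device)` and calls `model(src, trg, 0)` during evaluation. A minimal sketch of a wrapper consistent with those calls and with the steps listed above might look like this. The argument name `teacher_forcing_ratio`, its default of 0.5, and the greedy `argmax` choice for the next input are assumptions, not taken from the original code.

```python
import random

import torch
import torch.nn as nn


class Seq2Seq(nn.Module):
    """Sketch of an encoder-decoder wrapper (an assumption, not the post's listing)."""

    def __init__(self, encoder, decoder, device):
        super().__init__()
        self.encoder = encoder
        self.decoder = decoder
        self.device = device

    def forward(self, src, trg, teacher_forcing_ratio=0.5):
        # src = [src len, batch size], trg = [trg len, batch size]
        trg_len, batch_size = trg.shape
        trg_vocab_size = self.decoder.output_dim

        # tensor that stores the prediction for every target position
        outputs = torch.zeros(trg_len, batch_size, trg_vocab_size, device=self.device)

        # context vector z = h_T, also used as the initial decoder state s_0
        context = self.encoder(src)
        hidden = context

        # the first decoder input is the batch of <sos> tokens
        input = trg[0, :]

        for t in range(1, trg_len):
            prediction, hidden = self.decoder(input, hidden, context)
            outputs[t] = prediction

            # with probability teacher_forcing_ratio feed the ground-truth token,
            # otherwise feed the model's own most likely token
            teacher_force = random.random() < teacher_forcing_ratio
            input = trg[t] if teacher_force else prediction.argmax(1)

        return outputs
```

Passing `0` as the third argument, as the evaluation loop does, turns teacher forcing off, so the decoder always consumes its own predictions. Position 0 of `outputs` stays zero, which is why the loss is computed on `output[1:]`.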

2.4 Training

```python
INPUT_DIM = len(SRC.vocab)
OUTPUT_DIM = len(TRG.vocab)
ENC_EMB_DIM = 256
DEC_EMB_DIM = 256
HID_DIM = 512
ENC_DROPOUT = 0.5
DEC_DROPOUT = 0.5

enc = Encoder(INPUT_DIM, ENC_EMB_DIM, HID_DIM, ENC_DROPOUT)
dec = Decoder(OUTPUT_DIM, DEC_EMB_DIM, HID_DIM, DEC_DROPOUT)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = Seq2Seq(enc, dec, device).to(device)
```

```python
def init_weights(m):
    # initialise every parameter from a normal distribution with mean 0, std 0.01
    for name, param in m.named_parameters():
        nn.init.normal_(param.data, mean=0, std=0.01)

model.apply(init_weights)
```

```python
def count_parameters(model):
    return sum(p.numel() for p in model.parameters() if p.requires_grad)

print(f'The model has {count_parameters(model):,} trainable parameters')
```

```python
optimizer = optim.Adam(model.parameters())

# padding positions are excluded from the loss
TRG_PAD_IDX = TRG.vocab.stoi[TRG.pad_token]

criterion = nn.CrossEntropyLoss(ignore_index = TRG_PAD_IDX)
```
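The role of `ignore_index` can be checked on a toy example: with padding ignored, the loss equals the mean loss over the real tokens only. This is a minimal sketch; the padding index `PAD_IDX = 1`, the logits and the targets are made-up values for illustration.

```python
import torch
import torch.nn as nn

PAD_IDX = 1  # assumed padding index for this toy example

criterion = nn.CrossEntropyLoss(ignore_index=PAD_IDX)
plain = nn.CrossEntropyLoss()

torch.manual_seed(0)
logits = torch.randn(4, 6)  # [positions, vocab size]
target = torch.tensor([3, 5, PAD_IDX, PAD_IDX])

# loss with padding ignored vs. mean loss over the two real tokens
loss_ignoring_pad = criterion(logits, target)
loss_real_tokens = plain(logits[:2], target[:2])

print(torch.allclose(loss_ignoring_pad, loss_real_tokens))
```

Without `ignore_index`, the padded positions of short sentences in a batch would contribute to the loss and to the reported perplexity.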

```python
def train(model, iterator, optimizer, criterion, clip):
    model.train()

    epoch_loss = 0

    for i, batch in enumerate(iterator):
        src = batch.src
        trg = batch.trg

        optimizer.zero_grad()

        output = model(src, trg)
        # trg = [trg len, batch size]
        # output = [trg len, batch size, output dim]

        output_dim = output.shape[-1]

        # drop the first position (<sos>) and flatten for the loss
        output = output[1:].view(-1, output_dim)
        trg = trg[1:].view(-1)

        loss = criterion(output, trg)

        loss.backward()

        # clip the gradient norm to guard against exploding gradients
        torch.nn.utils.clip_grad_norm_(model.parameters(), clip)

        optimizer.step()

        epoch_loss += loss.item()

    return epoch_loss / len(iterator)
```

```python
def evaluate(model, iterator, criterion):
    model.eval()

    epoch_loss = 0

    with torch.no_grad():
        for i, batch in enumerate(iterator):
            src = batch.src
            trg = batch.trg

            # third argument 0: turn off teacher forcing
            output = model(src, trg, 0)

            output_dim = output.shape[-1]

            output = output[1:].view(-1, output_dim)
            trg = trg[1:].view(-1)

            loss = criterion(output, trg)

            epoch_loss += loss.item()

    return epoch_loss / len(iterator)
```

```python
def epoch_time(start_time, end_time):
    elapsed_time = end_time - start_time
    elapsed_mins = int(elapsed_time / 60)
    elapsed_secs = int(elapsed_time - (elapsed_mins * 60))
    return elapsed_mins, elapsed_secs
```
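A short usage sketch of `epoch_time`, with the function repeated so the snippet runs on its own. The timestamps are made-up values in seconds, standing in for what `time.time()` would return.

```python
def epoch_time(start_time, end_time):
    elapsed_time = end_time - start_time
    elapsed_mins = int(elapsed_time / 60)
    elapsed_secs = int(elapsed_time - (elapsed_mins * 60))
    return elapsed_mins, elapsed_secs

# timestamps in seconds, made up for illustration
print(epoch_time(100.0, 123.7))  # (0, 23)
print(epoch_time(0.0, 125.2))    # (2, 5)
```

Fractions of a second are truncated, not rounded.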

```python
N_EPOCHS = 10
CLIP = 1

best_valid_loss = float('inf')

for epoch in range(N_EPOCHS):
    start_time = time.time()

    train_loss = train(model, train_iterator, optimizer, criterion, CLIP)
    valid_loss = evaluate(model, valid_iterator, criterion)

    end_time = time.time()

    epoch_mins, epoch_secs = epoch_time(start_time, end_time)

    # keep the checkpoint with the lowest validation loss
    if valid_loss < best_valid_loss:
        best_valid_loss = valid_loss
        torch.save(model.state_dict(), 'tut2-model.pt')

    print(f'Epoch: {epoch+1:02} | Time: {epoch_mins}m {epoch_secs}s')
    print(f'\tTrain Loss: {train_loss:.3f} | Train PPL: {math.exp(train_loss):7.3f}')
    print(f'\t Val. Loss: {valid_loss:.3f} | Val. PPL: {math.exp(valid_loss):7.3f}')
```

Training results:

```
Epoch: 01 | Time: 0m 23s
    Train Loss: 5.058 | Train PPL: 157.219
     Val. Loss: 5.305 | Val. PPL: 201.323
Epoch: 02 | Time: 0m 23s
    Train Loss: 4.399 | Train PPL:  81.338
     Val. Loss: 5.026 | Val. PPL: 152.384
Epoch: 03 | Time: 0m 23s
    Train Loss: 4.054 | Train PPL:  57.643
     Val. Loss: 4.671 | Val. PPL: 106.856
Epoch: 04 | Time: 0m 23s
    Train Loss: 3.672 | Train PPL:  39.333
     Val. Loss: 4.161 | Val. PPL:  64.160
Epoch: 05 | Time: 0m 23s
    Train Loss: 3.308 | Train PPL:  27.339
     Val. Loss: 3.913 | Val. PPL:  50.047
Epoch: 06 | Time: 0m 23s
    Train Loss: 2.974 | Train PPL:  19.573
     Val. Loss: 3.781 | Val. PPL:  43.870
Epoch: 07 | Time: 0m 23s
    Train Loss: 2.728 | Train PPL:  15.305
     Val. Loss: 3.621 | Val. PPL:  37.372
Epoch: 08 | Time: 0m 23s
    Train Loss: 2.467 | Train PPL:  11.791
     Val. Loss: 3.553 | Val. PPL:  34.933
Epoch: 09 | Time: 0m 23s
    Train Loss: 2.247 | Train PPL:   9.462
     Val. Loss: 3.559 | Val. PPL:  35.121
Epoch: 10 | Time: 0m 23s
    Train Loss: 2.071 | Train PPL:   7.932
     Val. Loss: 3.499 | Val. PPL:  33.075
```
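The perplexity in the log is the exponential of the cross-entropy loss, as computed by `math.exp` in the training loop. The sketch below recomputes it from the printed losses of the first and last epochs. Because the printed losses are rounded to three decimals, the recomputed values should only agree with the log approximately.

```python
import math

# (train loss, val loss) copied from epochs 1 and 10 of the log above
losses = {1: (5.058, 5.305), 10: (2.071, 3.499)}

for epoch, (train_loss, valid_loss) in losses.items():
    train_ppl = math.exp(train_loss)
    valid_ppl = math.exp(valid_loss)
    print(f"Epoch {epoch:02}: train PPL {train_ppl:.2f} | val PPL {valid_ppl:.2f}")
```

A validation perplexity of about 33 means the model is, on average, roughly as uncertain as if it were choosing uniformly among 33 tokens at each position.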